Safety, Defensive Driving, and the Future of Transportation

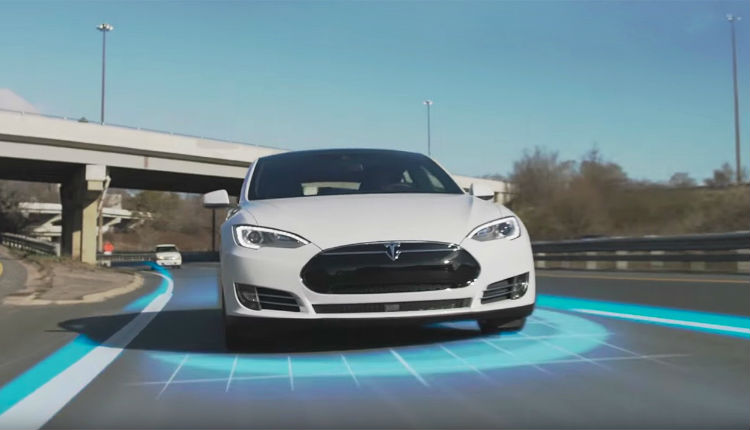

Every day we inch closer toward a big, bright, technologically driven future. Mobile and card payments are becoming more popular than cash. More and more communities are getting connected to the world around them through internet access, and we are seriously considering the prospect of colonising Mars. Along with all of these other technological advancements come partially and fully autonomous vehicles, and with them a host of implications, both positive and negative.

How will these technological marvels affect the future of road safety and transportation as a whole? Let’s take a closer look at how self-driving cars might pave the path forward.

Bicycle and Foot Traffic

The future we have been promised in science fiction film and literature is filled with amazing technology that makes our lives easier. Despite the dubious fashion choices often depicted in the genre, the future is shown to be filled with hoverboards, self-lacing shoes, and in some cases a conspicuous lack of any restaurant that isn’t Taco Bell. Whether that future is an authoritarian dystopia or a pleasant utopia, it is usually portrayed as a much safer place.

However, even with all of the vast technological improvements we have seen over the last century, the humble bicycle has remained a popular mode of transportation. These simple, affordable, human-powered machines likely won’t fall out of favour any time soon, even if they remain a pretty dangerous way to get from point A to point B. Bike safety is still a major point of contention in many communities, where the rules of the road can be hard for cyclists and drivers alike to understand fully.

While human drivers might see cyclists as a nuisance on their daily commute, they at least know right from wrong and are willing to brake for them. Autonomous vehicles, though, have not always extended cyclists the same courtesy. In 2018, a 49-year-old woman walking her bicycle across a road in Tempe, Arizona, was struck and killed by an autonomous test vehicle operated by Uber. The vehicle reportedly detected her but flagged the detection as a “false positive,” and it did not take evasive action in time to avoid her, leading to a tragic loss of life.

Autonomous Vehicles and Liability

The reality is that we are still a long way from seeing more than a handful of autonomous vehicles on our roads. In surveys, the vast majority of American adults say they are too wary of safety concerns to ride in a fully autonomous vehicle. Despite this public apprehension, companies like Uber and Waymo (which grew out of Google’s self-driving car project) continue to roll out city-based testing programmes in an effort to perfect their autonomous vehicles.

One problem with pressing ahead with testing is the question of liability when crashes do occur. The general rule of thumb for these companies seems to be to deny almost any culpability in the event of an incident, whether or not the evidence points to them being at fault.

This leaves people affected by these crashes scrambling to find some recourse, looking for some sort of closure. The family of the Arizona woman who was struck and killed by the autonomous Uber vehicle, for example, is now suing both the city of Tempe and the state of Arizona for allowing testing to take place before and after the accident.

Liability issues also pop up in other, less expected ways. When we hear of crashes involving autonomous vehicles, we tend to assume that the driverless car must be at fault. However, an alarming number of these accidents are caused by ordinary human drivers rear-ending autonomous vehicles. Autonomous vehicles behave in rational, rule-bound ways, which, believe it or not, makes their actions hard for human drivers to predict: a self-driving car may brake cautiously or come to a full stop in situations where the driver behind expects it to keep rolling.

Driving Under the Influence

While self-driving cars have the potential to make our roads safer, they will likely only do so once the majority of cars on the road are also autonomous. Self-driving vehicles could communicate with each other and rely on the cars around them to obey traffic laws and make reasonable decisions. Manufacturers like Tesla are even using big data, along with predictive analytics software, to power AI decision-making. By analysing vast amounts of data, these systems aim to make safer decisions and chart more efficient routes between destinations.

Humans, however, tend to act a bit more, well, human when it comes to self-driving vehicles.

Shortly after Tesla rolled out its “Autopilot” assisted driving feature, which still requires an attentive driver, the internet blew up with videos of people abusing the feature, doing everything from pretending to take a nap to getting stoned. Driving under the influence is never a good idea, and the allure of having an autonomous vehicle to get you home after a night out might attract quite a few people. Technology is a double-edged sword: while it can help addicts achieve sobriety, it can also enable problematic behaviours. Alcoholism is no laughing matter, and a self-driving vehicle won’t save anyone from a potentially horrific accident.

The truth is, humans are the leading cause of self-driving car accidents. Humans are fallible. We make mistakes. And when we consume too much alcohol, those mistakes become more frequent, no matter the situation. All in all, people shouldn’t rely on self-driving vehicles to get them home safely after a night of drinking; they should instead use the tried-and-true method of hailing a cab or booking a ride service.

At the end of the day, the future’s still bright, and we have a lot of potential at our fingertips when it comes to transportation. However, we need to keep in mind that technology doesn’t always advance as fast as we would like it to. Self-driving cars are not yet the completely safe alternative to driving that we have been waiting for. Despite this, it doesn’t hurt to keep looking forward. Sooner or later, we will live in that driverless utopia.