I Built a Mental Health Chatbot to Meet the Needs of Irish Students – And You Can Too

The way we approach mental health in Ireland has been transformed over several decades – in strategies for change and awareness, in wider discussions about mental health in our own lives and, in particular, in the lives of students.

In colleges and universities around Ireland, students are offered free counselling services. There is Mental Health Awareness Week, which runs from 22 November. The Union of Students in Ireland (USI) has called for greater government investment in student counselling services. And two months ago, a project designed to address young people’s mental health in Irish third-level education was launched.

At the core of these strategies is the desire to support students’ wellbeing. These services are invaluable, yet not all of them offer 24/7 contact with students, and they can be limited by time and funding. This is where chatbots can become part of the solution.

Bot technology is emerging as a potential mental health aid. Woebot, a chatbot for mental health, recently launched on Facebook Messenger. Woebot doesn’t replace therapy, but offers a friendly point of contact for anyone who feels they need someone to talk to. For people who don’t want face-to-face counselling, or who need support outside regular hours, chatbots as ‘digital interventions’ offer instant access to self-help resources and can act as an early screening tool.

Mental health is on our minds more than ever, and with good reason: in the Healthy Ireland Survey 2016, 10% of respondents indicated that they may have a mental health problem and 20% said they currently have, or have had, a mental health problem. Of all suicides in Ireland last year, 11.5% were among men and women aged between 15 and 24. Chatbots are no substitute for human interaction and therapy; however, research into their capabilities shows that they could provide effective support for users.

There’s a lot of literature on the web about machines and empathy, and AI as psychotherapy. It might seem a bit sci-fi and George Lucas, but a chatbot for student mental health doesn’t have to be complicated or have a computer-animated face. It can be easy to build, and it is. Companies like Snatchbot.me provide the tools and the advice to help you and me make our own chatbots, which means strategies to support students’ wellbeing can be developed at a community level, with savings in both time and cost. This guide will help you get started on building a bot that’s relevant and responsive to students’ mental health and wellbeing needs.

To start? You’ll need some chatbot technology. I used Snatchbot.me to make my mental health chatbot. I’m not going to describe how to use this software, as there is a built-in guide in your account, and you can also check out their YouTube channel for additional help and tutorial videos. It’s very straightforward and easy to use once you have the basics.

The point of this mental health bot is to act as a 24-hour, non-human point of support and communication for students who need to talk, to remind students of the importance of speaking about their mental health, and to direct students to mental health self-help services, helplines and websites.

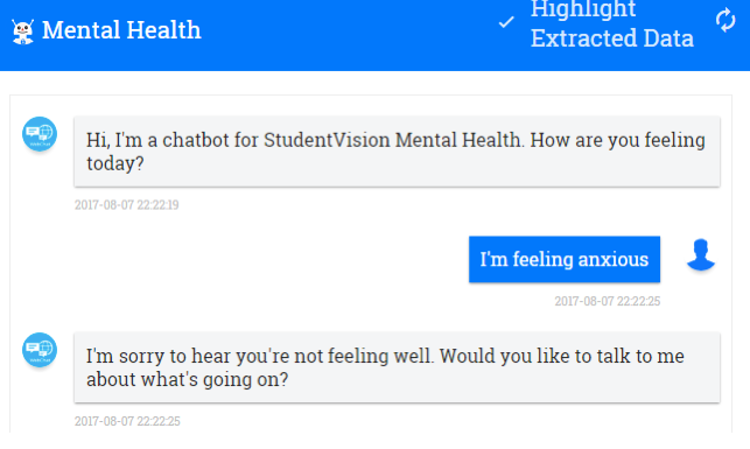

Keep your bot simple. Before I started building the chatbot, I wrote out on paper the pathways I expected the conversation to take. Start from the beginning: “Hi, I’m a chatbot for StudentVision Mental Health. How are you feeling today?”, or something along those lines, is fine. It’s a good idea to sketch out the possibilities for your dialogue so you end up with a map to follow when you build your chatbot.


Here are some examples from my bot:

For positive responses to the first message, such as “I’m feeling fine/good/okay…”, my bot expresses joy and appreciation at being told this. It follows up with a question: “Will you let me know if this changes?” If the user responds in the affirmative, the conversation ends with “thank you xx”. If the user responds in the negative, my bot expresses concern and asks the user to reconsider.

This is where you need to be careful not to be forceful or prescriptive. If the user does reconsider, the conversation ends in the same way as before. If not, my bot again expresses concern, but also offers alternatives, such as a helpline phone number or a link to a website.

Whenever the user responds in the negative, the bot repeats, in various ways, a reminder that talking about problems can make them easier to deal with. I built a loop into my bot that directs users to self-help services if they respond negatively to everything. Of course, mine was just a test bot. If you want your bot to be genuinely responsive to users’ needs, you’ll have to write more responses and pathways.
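Snatchbot builds these flows visually, so you don’t need any code. Still, it can help to see your paper map as data before you build it. Here is a minimal Python sketch of the “feeling fine” pathway described above, assuming every step after the greeting only needs a yes/no answer. The node names, the bot’s wording and the `HELPLINE`/`WEBSITE` placeholders are hypothetical and are not part of Snatchbot.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    message: str                   # what the bot says at this step
    on_yes: Optional[str] = None   # next node if the user answers yes
    on_no: Optional[str] = None    # next node if the user answers no


# Hypothetical placeholders: replace with real, verified support services.
HELPLINE = "<helpline number>"
WEBSITE = "<self-help website>"

# Hypothetical flow, starting after the user says they are feeling fine.
FLOW = {
    "ask_update": Node(
        "That's great to hear, thank you for telling me! "
        "Will you let me know if this changes?",
        on_yes="thanks", on_no="reconsider"),
    "reconsider": Node(
        "Oh, I'm a little worried. Talking about problems can make them "
        "easier to deal with. Would you reconsider?",
        on_yes="thanks", on_no="alternatives"),
    # Loops back to itself, so a user who says "no" to everything keeps
    # being pointed towards self-help services.
    "alternatives": Node(
        f"That's okay. You can also call {HELPLINE} or visit {WEBSITE}. "
        "Will you let me know if things change?",
        on_yes="thanks", on_no="alternatives"),
    "thanks": Node("thank you xx"),  # terminal node: no outgoing transitions
}


def run(answers: List[bool], start: str = "ask_update") -> List[str]:
    """Walk the flow with scripted yes/no answers and return the bot's messages."""
    node_id = start
    transcript = [FLOW[node_id].message]
    for answer in answers:
        node = FLOW[node_id]
        next_id = node.on_yes if answer else node.on_no
        if next_id is None:  # terminal node reached; ignore any extra answers
            break
        node_id = next_id
        transcript.append(FLOW[node_id].message)
    return transcript
```

For example, `run([False, False, True])` walks through the bot’s concern and the alternatives, and ends on “thank you xx”. Writing the map down this way makes it easy to spot a pathway that leads nowhere before you start clicking it together in the builder.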

When a user responds to the initial greeting with “I’m feeling terrible/alone/anxious”, or any other negative emotion, the bot expresses concern and asks whether they would like to talk about it. Again, prepare positive and negative pathways for users, or even a middle ground for “I don’t know” answers. And remember to direct users towards appropriate websites and services.
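Deciding which pathway a free-text reply belongs to is the other half of the map. The following is a minimal keyword-matching sketch in Python. The keyword lists, the crude handling of “not” and the follow-up messages are illustrative assumptions, not how Snatchbot matches user input.

```python
import re

# Hypothetical keyword lists: extend them with the words your students use.
POSITIVE = {"fine", "good", "okay", "ok", "great", "grand"}
NEGATIVE = {"terrible", "alone", "anxious", "sad", "down", "stressed", "lonely"}
UNSURE_PHRASES = ("don't know", "dont know", "not sure", "unsure")

# Hypothetical first message for each pathway.
NEXT_MESSAGE = {
    "positive": "That's great to hear! Will you let me know if this changes?",
    "negative": "I'm sorry you're feeling that way. Would you like to talk about it?",
    "unsure": "That's okay, it can be hard to say. Would you like to talk about it?",
}


def classify(reply: str) -> str:
    """Route a reply to 'positive', 'negative' or 'unsure'."""
    # Lower-case and normalise curly apostrophes so "don’t" matches "don't".
    text = reply.lower().replace("\u2019", "'")
    if any(phrase in text for phrase in UNSURE_PHRASES):
        return "unsure"
    words = set(re.findall(r"[a-z']+", text))
    if words & NEGATIVE:
        return "negative"
    if words & POSITIVE:
        # Crude negation check: "not okay" or "not good" counts as negative.
        return "negative" if "not" in words else "positive"
    return "unsure"  # nothing recognised: use the middle-ground pathway


def first_response(reply: str) -> str:
    return NEXT_MESSAGE[classify(reply)]
```

Negative words are checked before positive ones, so a mixed reply such as “good but a bit anxious” is routed towards support rather than away from it. Anything the bot doesn’t recognise falls into the middle ground instead of being treated as fine.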

Before you write your dialogue, ask yourself: What do students feel stressed about? What issues affect them? What services are they aware of? At what times of the year do students most often need support services? How do you think students feel talking to a chatbot about depression, self-harm, or feeling down or isolated? What language would you use, and what language should you prepare your bot to recognise?

Put yourself in the place of the user. A chatbot can’t truly emulate empathy, but it can do its best to make users feel comfortable enough to discuss their feelings, and to show that the service behind it genuinely cares about what they have to say and wants to help.

Use natural, conversational language. Don’t overload responses with too many words or sentences. Ask one question at a time and try not to be prescriptive in the advice you give – it’s a bot, not a human, and the user knows this, so don’t try to make it something it isn’t. Make your bot aware of this too by including a response that shows some self-awareness. And don’t shy away from adding a bit of humour, or an image where appropriate.

During the four years I spent as an undergraduate, I used the free counselling services that were provided. During my year as a postgraduate, I didn’t. I wanted to and I needed to, but I couldn’t make the time to arrange a face-to-face meeting.

Research from the USI shows that 74% of students in Ireland fear that they will experience negative mental health in the future. A friendly neighbourhood chatbot for students who can’t make the time could potentially make a huge difference to their lives.

Image via Yvonne Kiely.